HOTPOINT UH8F1CX1 upright freezer - Freestanding - 260 L - No Frost fan-assisted cooling - W 59.5 x H 187.5 cm - Stainless steel

Discount silver upright freezer - Affordable home appliance store near Libourne - Comptoir Electro Ménager

Whirlpool wva35642nfw2 - upright freezer - 344 L - No Frost fan-assisted cooling - W 71 x H 187 cm - white - La Poste

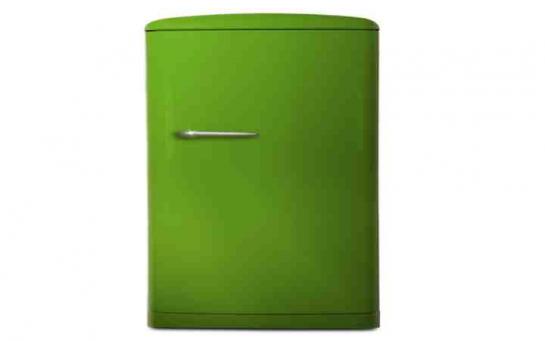

![Fan-assisted upright freezers: the best products of [year] - congelateurarmoire.org](https://www.congelateurarmoire.org/wp-content/uploads/2020/01/Haier-H2F-220WAA.jpg)